Use Helmfile for Deployment in Offline Environments

Helmfile is an orchestration tool for collecting, building, and deploying cloud-native applications. In essence, it declaratively manages sets of Helm chart releases.

One interesting idea I came across recently is using it in air-gapped environments, where internet access is not available.

Use Case

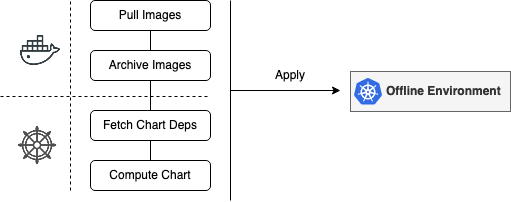

Let’s say you were deploying an application to a Kubernetes cluster. The idea is to package all dependencies and manifests beforehand in a local or control machine, then push the consolidated deployment directory to the offline machine.

The diagram below depicts the flow.
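One way to handle the transfer step is to pack the consolidated ./output directory into a single archive with a checksum manifest, so the offline side can verify that nothing was corrupted in transit. This is a minimal sketch that assumes GNU coreutils (`sha256sum`, `sort -z`) and tar. The script name bundle.sh and its bundle/verify subcommands are hypothetical.

```shell
#!/usr/bin/env bash
# bundle.sh (hypothetical): pack a deployment directory with SHA-256 sums
# on the online machine, and verify it after transfer to the offline one.
set -euo pipefail

# bundle_output SRC_DIR ARCHIVE
# Writes SRC_DIR/SHA256SUMS, then tars the whole directory (sums included).
bundle_output() {
  local src="$1" archive="$2"
  (
    cd "$src"
    # Hash every regular file except the manifest itself, in stable order.
    find . -type f ! -name SHA256SUMS -print0 | sort -z \
      | xargs -0 -r sha256sum > SHA256SUMS
  )
  tar -czf "$archive" -C "$src" .
}

# verify_bundle ARCHIVE DEST_DIR
# Extracts the archive and fails if any file's hash does not match.
verify_bundle() {
  local archive="$1" dest="$2"
  mkdir -p "$dest"
  tar -xzf "$archive" -C "$dest"
  (cd "$dest" && sha256sum --quiet -c SHA256SUMS)
}

# Usage: ./bundle.sh bundle ./output ./offline-bundle.tgz
#        ./bundle.sh verify ./offline-bundle.tgz ./deploy
case "${1:-}" in
  bundle) shift; bundle_output "$@" ;;
  verify) shift; verify_bundle "$@" ;;
esac
```

Keep the archive outside the directory being bundled. Otherwise, tar would try to include the archive in itself.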

Example Deployment

As an example, the following walks through a custom offline deployment of Dex, an OpenID Connect identity provider.

A simplified helmfile would look like this:

```yaml
# helmfile.yaml
repositories:
  - name: dex
    url: https://charts.dexidp.io

helmDefaults:
  verify: true
  wait: true
  waitForJobs: true
  timeout: 600

releases:
  - name: dex
    namespace: dex
    createNamespace: true
    chart: dex/dex
    version: 0.11.1
    values:
      - config:
          issuer: {{ requiredEnv "OIDC_ISSUER" }}
          storage:
            type: kubernetes
            config:
              inCluster: true
          oauth2:
            skipApprovalScreen: true
          staticClients: []
          connectors: []
      - ingress: {}
```

The following script saves archived copies of the chart's assets (the container image and the chart package) under ./output.

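```bash
#!/bin/bash
# offline.sh: save the Dex image and chart under ./output
set -euo pipefail

IMAGE_VERSION=v2.34.0
IMAGE=ghcr.io/dexidp/dex
CHART_REPO=https://charts.dexidp.io
CHART=dex/dex
CHART_VERSION=0.11.1

mkdir -p ./output/images ./output/charts

docker pull "${IMAGE}:${IMAGE_VERSION}"

# tagging is optional: it prefixes the image with the local registry
# (docker tag SOURCE TARGET)
docker tag "${IMAGE}:${IMAGE_VERSION}" \
  "registry.local.lan/${IMAGE}:${IMAGE_VERSION}"

docker save "registry.local.lan/${IMAGE}:${IMAGE_VERSION}" \
  -o "./output/images/dex-${IMAGE_VERSION}.tar"

# helm repo add takes a repository name and a URL
helm repo add dex "${CHART_REPO}"
helm pull "${CHART}" --version "${CHART_VERSION}" --destination ./output/charts/
```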

Then, it should be a matter of executing the following sequence to prepare the final build directory:

```console
$ BUILD_TIME=$(date +%Y-%m-%d-at-%H-%M)
$ ./offline.sh
$ export $(cat .env | xargs)   # .env must define OIDC_ISSUER
$ helmfile fetch
$ helmfile build > ./output/final-${BUILD_TIME}.yml
```
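The `export $(cat .env | xargs)` step breaks on values that contain spaces or quotes, and on `#` comment lines. A slightly more robust sketch sources the file with auto-export (`set -a`) and fails early if OIDC_ISSUER, which helmfile.yaml reads via `requiredEnv`, is missing. It assumes .env uses plain `KEY=value` shell syntax and is trusted, because sourcing executes it. The helper name load_env is hypothetical.

```shell
# load-env.sh (hypothetical): source this file, then call load_env.
# No `set -e` here, because this is meant to be sourced into your shell.

# load_env [FILE]
# Exports every variable assigned in FILE (default: .env) and returns
# non-zero if OIDC_ISSUER is still empty afterwards.
load_env() {
  local env_file="${1:-.env}"
  if [[ ! -f "$env_file" ]]; then
    echo "load_env: $env_file not found" >&2
    return 1
  fi
  set -a            # auto-export every variable assigned while sourcing
  # shellcheck disable=SC1090
  . "$env_file"
  set +a
  if [[ -z "${OIDC_ISSUER:-}" ]]; then
    echo "load_env: OIDC_ISSUER must be set in $env_file" >&2
    return 1
  fi
}
```

Usage: `. ./load-env.sh && load_env .env && helmfile fetch`.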

If the helmfile binary is not available in the target environment, render plain Kubernetes manifests instead of running `helmfile build`:

```console
$ helmfile template > ./output/final-${BUILD_TIME}.yml
```
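After rendering, it can help to confirm that every image the manifests reference was actually saved by offline.sh. The sketch below assumes the `image: <ref>` line form that `helm template` output normally uses. The script and function name list_images are hypothetical.

```shell
#!/usr/bin/env bash
# list-images.sh (hypothetical): print the unique image references in a
# rendered manifest, one per line.
set -euo pipefail

list_images() {
  local manifest="$1"
  # Keep `image:` lines (including `- image:` list items), strip the key,
  # trailing whitespace and quotes, then de-duplicate.
  { grep -E '^[[:space:]]*(-[[:space:]]+)?image:' "$manifest" || true; } \
    | sed -E 's/^[[:space:]]*(-[[:space:]]+)?image:[[:space:]]*//; s/[[:space:]]+$//' \
    | tr -d "\"'" \
    | sort -u
}

# Usage: ./list-images.sh ./output/final-<build-time>.yml
if [[ $# -gt 0 ]]; then
  list_images "$1"
fi
```

Any reference in the output that has no matching tarball under ./output/images will fail to pull on the offline cluster.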

Finally, on the production node you would run something similar to this:

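```console
$ for f in ./output/images/*.tar; do docker load -i "$f"; done
$ docker push registry.local.lan/ghcr.io/dexidp/dex:v2.34.0
$ helmfile -f ./output/final-*.yml sync --skip-deps

# If `helm`/`helmfile` is not available, apply the plain manifests instead:
$ kubectl apply -f ./output/final-*.yml
```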
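On the kubectl path, the rendered manifests still point at the upstream registry (ghcr.io for Dex), which the offline cluster cannot reach. The minimal sketch below prefixes every `image:` reference with a local registry host. It assumes images were tagged as `registry.local.lan/<original ref>`, following the convention in offline.sh. The script and function names are hypothetical. Run it once per file, because it does not detect references that already carry the prefix.

```shell
#!/usr/bin/env bash
# retarget-images.sh (hypothetical): rewrite image refs in a manifest to
# pull from a local registry; the result is written to stdout.
set -euo pipefail

# retarget_images MANIFEST [REGISTRY]
retarget_images() {
  local manifest="$1" registry="${2:-registry.local.lan}"
  # Insert "<registry>/" right after `image:` and an optional opening quote.
  sed -E "s#^([[:space:]]*(-[[:space:]]+)?image:[[:space:]]*\"?)#\1${registry}/#" "$manifest"
}

# Usage: ./retarget-images.sh ./output/final-<build-time>.yml > ./output/local.yml
if [[ $# -gt 0 ]]; then
  retarget_images "$@"
fi
```

The rewritten file can then be passed to `kubectl apply -f` in place of the original manifest.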

This method generalizes to any Helm-based deployment. List the chart's images in the script, pull the matching chart, and reuse the same build and sync steps. For the complete example listing, refer to the GitHub repo here.
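To take offline.sh beyond Dex, the image handling can be driven by a list of image references. The sketch below assumes Docker and the same `registry.local.lan/<original ref>` tagging convention as offline.sh. The script name save-images.sh, the REGISTRY override, and the archive naming scheme are illustrative choices, not part of the original repo.

```shell
#!/usr/bin/env bash
# save-images.sh (hypothetical): pull, retag, and save any number of images.
set -euo pipefail

REGISTRY="${REGISTRY:-registry.local.lan}"

# archive_name REF: turn an image reference into a flat, filesystem-safe
# tarball name, e.g. ghcr.io/dexidp/dex:v2.34.0 -> ghcr.io_dexidp_dex_v2.34.0.tar
archive_name() {
  local ref="$1"
  ref="${ref//\//_}"   # slashes would otherwise create sub-directories
  ref="${ref//:/_}"    # colons are awkward on some filesystems
  printf '%s.tar\n' "$ref"
}

# save_images OUT_DIR REF...
save_images() {
  local out="$1"; shift
  mkdir -p "$out"
  local ref
  for ref in "$@"; do
    docker pull "$ref"
    docker tag "$ref" "${REGISTRY}/${ref}"
    docker save "${REGISTRY}/${ref}" -o "${out}/$(archive_name "$ref")"
  done
}

# Usage: ./save-images.sh ./output/images ghcr.io/dexidp/dex:v2.34.0 [more refs...]
if [[ $# -gt 0 ]]; then
  save_images "$@"
fi
```

Keeping the archive names flat means the offline side can load everything with a single loop over ./output/images/*.tar.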

Conclusion

The security and compliance constraints of air-gapped environments can make cloud-native deployments hard to deliver. Fortunately, several cloud-native projects (Harbor, Rancher, and K3s, to name a few) offer options for such environments. In addition, the approach presented above is generic enough to deploy any Helm application in offline mode.